Some thoughts on non-probability, aka opt-in, survey panels

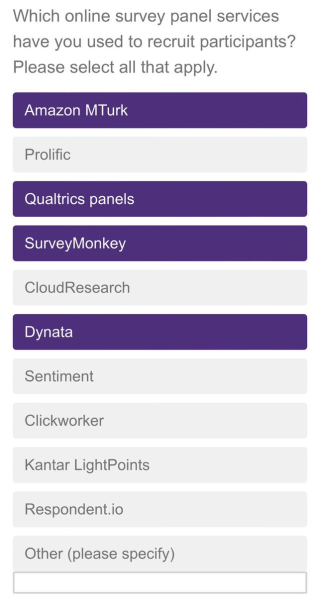

I participated in a survey conducted by Clemson this week. I was eligible because I had published a paper using opt-in panel data at some point. I posted the image above to Twitter and followed it with a brief review of each of the panels I’ve used. I’ve been thinking about that and want to say more. During the rare times I’ve had enough money in the research budget, I’ve used KN/GfK/Ipsos’s Knowledge Panel (KP). KP is a probability-based sample and more representative of the population than opt-in panels. Opt-in panels are basically convenience samples. There are interesting research questions about if and when researchers should use opt-in panels. A forthcoming Applied Economic Perspectives and Policy (AEPP) symposium is a step in that direction (here is the second, I think, of four articles to appear online).

The first time that I enjoyed a probability sample was when I was working on Florida’s BP/Deepwater Horizon damage assessment with Tim and others. We had plenty of funding for two (!) KP surveys, and two articles have been published (one and two [the first, I think, of the AEPP articles to appear online]). The second time was a few years ago, with funding from the state of North Carolina, when Ash Morgan and I looked at the invasive hemlock woolly adelgid (HWA) and western North Carolina forests. I’ve presented papers from that study at a couple of conferences and at UNC Asheville, but nothing is publishable yet. I hope to write the forest paper this summer because it boasts the same coincidental design as the second published paper above. GfK supplemented the KP sample with opt-in responses (while charging us the same price per unit), so there is a data quality comparison between probability-based and opt-in samples. In the second published AEPP paper, with a single binary choice question, we find that the opt-in data were lower quality. In the HWA study we aren’t finding many differences. In other words, the opt-in data are as good as the probability-based data.

I think that these opt-in panels will be increasingly used in the future and we need to figure out how best to use them. Opt-in data are much less expensive. For example, a Dynata recreational user respondent cost me $5 in a February 2023 survey. A KP recreational user cost $35 per unit. Of course, KN/GfK programmed the survey, while I program my own when using the Dynata panel, but programming it yourself doesn’t cost much more when you are already writing the questions and trying to explain to KN/GfK how to do it. One known problem with opt-in panels is that you don’t get a response rate, but it is a toss-up whether no response rate is worse than a response rate of less than 10% from a mail survey. The good thing about a mail survey is that you know what sort of bias your data will suffer from (sample selection). I don’t have an estimate of the cost of a mail survey, but it is much higher than $3.50 when the response rate is less than 10%.
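To make the cost comparison concrete, here is a rough Python sketch of the arithmetic. The $5 and $35 per-respondent prices are the ones quoted above. The mail survey per-piece cost, the 10% response rate used in the calculation, and the budget are hypothetical placeholders, not estimates, since I don’t have real mail survey costs.

```python
def cost_per_complete(cost_per_contact: float, response_rate: float) -> float:
    """Cost of one completed response when only a fraction of contacts respond."""
    if not 0 < response_rate <= 1:
        raise ValueError("response_rate must be in (0, 1]")
    return cost_per_contact / response_rate


def completes_for_budget(budget: float, unit_cost: float) -> int:
    """Number of whole completed responses a fixed budget can buy."""
    return int(budget // unit_cost)


# Per-respondent prices quoted in the post (panels charge per completed response).
DYNATA_COST = 5.0
KP_COST = 35.0

# Hypothetical placeholders, for illustration only.
MAIL_COST_PER_PIECE = 2.0   # assumed printing and postage per mailed survey
MAIL_RESPONSE_RATE = 0.10   # assumed; the post only says "less than 10%"
BUDGET = 10_000.0           # assumed research budget

mail_cost = cost_per_complete(MAIL_COST_PER_PIECE, MAIL_RESPONSE_RATE)

for name, unit_cost in [
    ("Dynata (opt-in)", DYNATA_COST),
    ("KP (probability)", KP_COST),
    ("Mail (hypothetical)", mail_cost),
]:
    n = completes_for_budget(BUDGET, unit_cost)
    print(f"{name:<20} ${unit_cost:6.2f} per complete, {n} completes")
```

The point of dividing by the response rate is that a low response rate multiplies the cost of every usable mail response, so a cheap-looking per-piece cost can still be expensive per completed survey.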

I attended this workshop where four of us provided comments on five stated preference studies funded by the EPA that have been published in PNAS. Each of these studies was multi-year and used focus groups, pretests and probability-based sample data. The time and money cost was very high. During the discussion, one of the exhausted researchers involved in those studies asked how we economists could go from these great but unlikely-to-be-useful-for-policy-analysis (my words) studies to something that would be useful for policy analysis. The audience was stumped for a second and then I realized that I had an answer. The long-term answer, I think, is taking the lessons from these huge studies and developing benefit estimates with models from opt-in data. You can do this within one year with opt-in data and a single pretest, relative to 3-5 years for a major study. The test, I think, is whether the results from models using opt-in data are better than benefit transfer, which is how most policy analysis is being done.

I think the answer is yes (opt-in data models are better than benefit transfer). The second of the published AEPP articles above resulted from a pretest of the PNAS studies. Its conclusion was that opt-in data weren’t so bad. I’m hoping to contribute to the “opt-in data is good enough for policy” literature by thinking about the role of attribute non-attendance in analyzing opt-in data (more on this soon, I hope). We need more studies like these to convince a skeptical bunch of environmental economists and, especially, OMB that policy analysis will be improved if we don’t always rely on million-dollar studies.